There is a new sync API in AZCopy v10 that makes me happy, and it's already included in Azure CLI: sync a folder or file to Azure blob storage (or vice versa). In this post I show you how I use it for my blog!

Version 10 of the AZCopy tool introduced a feature I had been waiting for a long time. It solves a simple problem: sync a folder with Azure blob storage. Everything that is new or changed in the source folder is uploaded to the target blob storage, and, optionally, files that are no longer required are removed. Awesomesauce!
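To make those semantics concrete, here is a minimal Python sketch of what a one-way sync decides. It assumes each side is listed as a dictionary of relative path to last-modified timestamp, and that "changed" means "newer in the source than in the destination". It illustrates the idea under those assumptions and is not AZCopy's actual implementation; `plan_sync` and `SyncPlan` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SyncPlan:
    """What a one-way sync from a source folder to a container would do."""
    upload: list[str] = field(default_factory=list)
    delete: list[str] = field(default_factory=list)


def plan_sync(source: dict[str, float],
              destination: dict[str, float],
              delete_destination: bool = False) -> SyncPlan:
    """Compare two listings of {relative path: last-modified timestamp}.

    Names are compared case-sensitively, the same way blob names are.
    """
    plan = SyncPlan()
    for name, mtime in sorted(source.items()):
        # Upload files that are missing in the destination or newer in the source.
        if name not in destination or mtime > destination[name]:
            plan.upload.append(name)
    if delete_destination:
        # Remove anything in the destination that no longer exists in the source.
        plan.delete = sorted(name for name in destination if name not in source)
    return plan


source = {"index.html": 200.0, "about/index.html": 100.0, "Post.html": 150.0}
destination = {"index.html": 100.0, "about/index.html": 100.0,
               "post.html": 120.0, "old.html": 50.0}
plan = plan_sync(source, destination, delete_destination=True)
print(plan.upload)  # ['Post.html', 'index.html']
print(plan.delete)  # ['old.html', 'post.html']
```

Note how a case-only rename (`post.html` to `Post.html`) shows up as one upload plus one deletion: without the delete step, the old blob would simply stay behind.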

The same API is now also available in Azure CLI (version 2.0.65 or later, released on May 21, 2019), which includes the AZCopy v10 tool.

With the following line I publish this blog to Azure Storage:

```
az storage blob sync -c $web --account-name melcherit -s "C:\agent_work\1\s\public"
```

- `--account-name` is the storage account name
- `-c` is the target blob storage container
- `-s` is the local source folder
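If you script this step, a small Python wrapper can assemble the same arguments. This is a sketch, not part of Azure CLI, and the function names are hypothetical. One detail worth knowing: typed unquoted in bash or PowerShell, `$web` is expanded as a variable, so there it needs single quotes (`'$web'`). Passing an argument list to `subprocess` avoids that problem on Linux and macOS because no shell is involved.

```python
import shutil
import subprocess
from typing import List


def build_blob_sync_command(account_name: str, container: str, source: str) -> List[str]:
    """Argument list for `az storage blob sync`, mirroring the command above."""
    return [
        "az", "storage", "blob", "sync",
        "-c", container,                 # target blob container, e.g. "$web"
        "--account-name", account_name,  # storage account name
        "-s", source,                    # local source folder
    ]


def run_blob_sync(account_name: str, container: str, source: str) -> None:
    """Run the sync; raises CalledProcessError if az exits with a non-zero code."""
    cmd = build_blob_sync_command(account_name, container, source)
    # On Windows az is a batch file (az.cmd), so resolve its full path first.
    cmd[0] = shutil.which("az") or "az"
    # No shell=True: on Linux/macOS no shell parses the arguments,
    # so "$web" is passed literally instead of being expanded as a variable.
    subprocess.run(cmd, check=True)
```

For example, `run_blob_sync("melcherit", "$web", r"C:\agent_work\1\s\public")` runs the command shown above.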

Update your Azure CLI (just run the installation again)!

Warning! At the time of writing, the Azure CLI command does not let you specify whether destination files should be deleted. If you need that control, use the AZCopy v10 tool directly.
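Calling AZCopy v10 directly gives you that control through `azcopy sync` and its `--delete-destination` flag (`true`, `false` or `prompt`; the default is `false`). The sketch below only builds the argument list. It assumes you authenticate either with `azcopy login` or with a SAS token appended to the container URL; `build_azcopy_sync_command` and its `sas_token` parameter are hypothetical names.

```python
from typing import List, Optional


def build_azcopy_sync_command(source: str, account_name: str, container: str,
                              sas_token: Optional[str] = None,
                              delete_destination: str = "false") -> List[str]:
    """Argument list for `azcopy sync` from a local folder to a blob container."""
    if delete_destination not in ("true", "false", "prompt"):
        raise ValueError("delete_destination must be 'true', 'false' or 'prompt'")
    url = f"https://{account_name}.blob.core.windows.net/{container}"
    if sas_token:
        # Assumption: authenticate with a SAS token instead of `azcopy login`.
        url += "?" + sas_token.lstrip("?")
    return [
        "azcopy", "sync", source, url,
        "--recursive",
        # Controls whether blobs missing from the source are removed.
        f"--delete-destination={delete_destination}",
    ]
```

Use `delete_destination="prompt"` while you build confidence: AZCopy then asks before removing anything.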

The full documentation is here:

My use cases

I use this to deploy this blog to an Azure Storage static website (see details here), and my deployments left behind files that were no longer required. And when I changed the case of a file name, the change was not replicated to Azure, because Azure blob storage is case-sensitive! That led to unexpected behavior and simply was not clean. I experimented with deleting the entire container during a deployment, but deleting 2 GB of files and copying them again took too long (I am terribly impatient!).

In the past, I used Azure Storage to distribute PowerShell scripts in a customer project, and not being able to sync easily (especially removing and renaming scripts) caused some issues. Not great, but now solved.

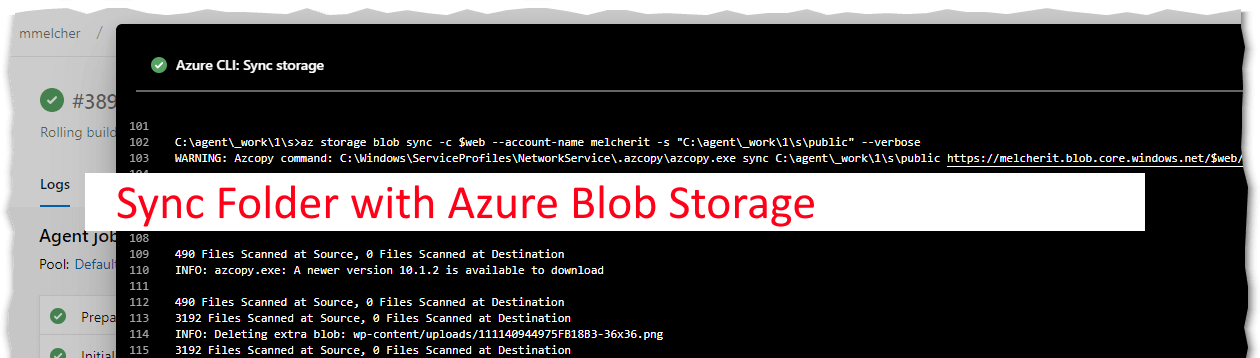

Usage in Azure DevOps

I automated the deployment of my blog (of course!) with Azure DevOps: once I `git push` the changes, the Azure Pipeline compiles my blog and copies the files to Azure Storage. Before that, I used the Azure File Copy task (version 3), and the sync API won't be supported in that task.

I worked around that by using the AzureCLI task (version 1) with the same command I showed you above:

```
az storage blob sync -c $web --account-name melcherit -s "$(System.DefaultWorkingDirectory)\public"
```

You can see that during the deployment, both source and target are enumerated and compared; then files are transferred and deleted. Cool. In my case the task takes 22 seconds and compares 3194 files (657 MB). The first run was fun: there was so much stuff on the blob storage that was no longer required.

Warning! That leads to the second warning: the API is powerful. It might make sense to protect yourself by enabling soft delete, which lets you recover if something goes wrong.
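Soft delete for blobs can be turned on with Azure CLI (`az storage blob service-properties delete-policy update`). Here is a minimal sketch that builds that command. The function name is hypothetical, and the seven-day retention is only an example value; pick your own period (Azure accepts 1 to 365 days).

```python
from typing import List


def build_soft_delete_command(account_name: str, days_retained: int = 7) -> List[str]:
    """Argument list that enables blob soft delete on a storage account."""
    # Blob soft delete retention must be between 1 and 365 days.
    if not 1 <= days_retained <= 365:
        raise ValueError("days_retained must be between 1 and 365")
    return [
        "az", "storage", "blob", "service-properties", "delete-policy", "update",
        "--account-name", account_name,
        "--enable", "true",
        "--days-retained", str(days_retained),
    ]
```

Run the result with `subprocess.run(cmd, check=True)` once, before the first sync that is allowed to delete blobs.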

So, what are you waiting for?

Hope it helps,

Max
